At first I struggled with the idea of an information ecology: how can something that is controlled by humans be an ‘equal’ ecology, even taking dominant species into account? It was only when I deleted the imaginary line between human and machine that I was able to grasp the concept. What is important is the attitudes and perceptions of the people on the internet, not the fact that humans could collectively shut down the internet on a whim. It is the attitudes of various groups of people on the internet that create the opposing forces in this ecology. These attitudes, together with technological advances (for example Napster), develop in an ‘ecology-like’ way, and it is this that can be referred to as an information ecology. Short of shutting the internet down, there is little that can be done to control the content. This means that a large number of parties are influencing the evolution of the internet and of communication and sharing, which fits the definition of an ‘Information Ecology’.

How might the metaphor of an ‘ecology’ impact on the way you think about, understand or use the Internet?

The term is clearly intended to convey that the internet is developing without any particular plan or guideline and is evolving with each new dominant technology or attitude. Major sites such as Myspace, technologies like IRC and P2P, leisure activities like games, news sites and so on all act on this environment to evolve it a bit more with each passing day.

How are the concepts ‘information’ and ‘communication’ understood within the framework of an ‘information ecology’?

These are founding aspects, as it is only through the communication of information that the attitudes and values that shape the internet are passed on. Without them there would be no ‘information ecology’ and the internet would cease to exist. By attacking the communication of information in the form of music, the RIAA and similar bodies are attacking the founding purpose of the internet, which is to communicate such things. It may be necessary to have some censorship of communication for societal goals (e.g. preventing child pornography); however, lines must be drawn very carefully to avoid counter-productive censorship that would hinder this ‘ecology’. Arguing that it is an ‘ecology’ seems to indicate that it would be very difficult to shut these things down, as resistance would come from within the ecology, as we have seen in the example of P2P. As fast as the RIAA shuts down P2P networks and torrent sites (most recently torrentspy), more pop up to take their place.

Why don’t we talk of a ‘communication ecology’?

The term ‘communication’ cuts the definition too narrowly. What is communicated is information and data, without which the communication would be pointless. Communication itself is usually not enough to bring about a change or to influence the ‘ecology’; it is what is communicated that is crucial. It isn’t plain talking that has shaped the internet; it is technologies and programs like Napster, Myspace and games that have created this ecology.

Case Study: Peer to Peer

A main advantage of p2p over centralized distribution is cost and bandwidth efficiency. By linking the individual nodes, the host company eliminates the need for the tremendous amounts of bandwidth that would otherwise be needed to support the level of data transfer, and eliminates bottlenecks by avoiding a central distribution location. The costs of the network are also borne by the nodes themselves (most likely home computers). Modern p2p networks have gone from a ‘Napster-like’ distribution method utilizing a central server to a completely decentralized form which is virtually impossible to shut down.

First Generation P2P: Napster was a first generation p2p network, as it used a centralized server to enable connections between nodes. The files themselves moved between users, but the index of who had which file sat on that central server, so record companies were able to shut Napster down quickly and relatively easily.
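To make the single point of failure concrete, here is a minimal sketch of a central index. It is not Napster's actual protocol; the `CentralIndex` class, the peer names and the file names are all hypothetical and only illustrate the idea that peers find each other through one server.

```python
from collections import defaultdict


class CentralIndex:
    """Hypothetical stand-in for a first generation index server."""

    def __init__(self):
        self.online = True
        # Maps a file name to the set of peers that say they hold it.
        self._peers_by_file = defaultdict(set)

    def register(self, peer, filenames):
        """A peer announces the files it is willing to share."""
        for name in filenames:
            self._peers_by_file[name].add(peer)

    def search(self, filename):
        """Return the peers holding a file; fails if the server is down."""
        if not self.online:
            raise ConnectionError("index server is offline")
        return sorted(self._peers_by_file.get(filename, set()))


index = CentralIndex()
index.register("peer_a", ["song.mp3"])
index.register("peer_b", ["song.mp3", "talk.mp3"])
print(index.search("song.mp3"))  # ['peer_a', 'peer_b']

index.online = False  # take away the one server and nobody can find anything
try:
    index.search("song.mp3")
except ConnectionError as err:
    print("lookup failed:", err)
```

The peers still hold their files after the index goes offline, but they have no way to discover each other, which is why later generations moved the index out to the nodes.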

Second Generation P2P: Gnutella was a second generation p2p network; however, it quickly bogged down due to bottlenecks as all the ‘Napster refugees’ joined and overloaded the system. ‘Luckily’ the problem was resolved via FastTrack, which divided tasks between nodes, allowing some to be used for indexing while others were used for transfers. Examples of 2nd generation p2p are Kazaa, eMule and eDonkey.

Third Generation P2P: 3rd generation p2p focused on anonymity, which it achieved by routing information through numerous nodes, making it hard to separate the downloader from the ‘innocent bystander’. This was a direct result of the increased threat from record companies and others seeking to close p2p networks. Freenet, GNUnet and Entropy are examples of 3rd generation p2p networks. They have not been as popular as 2nd generation p2p, as they use much larger quantities of bandwidth due to the extra routing of files.
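The bandwidth cost of that extra routing is simple arithmetic. The sketch below is only an illustration under one assumption of mine: every relay forwards the whole file once, so a file passing through k relays crosses k + 1 links. The function name and the 700 MB file size are hypothetical.

```python
def relayed_traffic_mb(file_size_mb, relay_nodes):
    """Total data carried by the network when a file passes through relays.

    Assumes each relay forwards the whole file once, so the file crosses
    relay_nodes + 1 links between the source and the downloader.
    """
    if relay_nodes < 0:
        raise ValueError("relay_nodes must be zero or more")
    links = relay_nodes + 1
    return file_size_mb * links


for relays in (0, 1, 3, 5):
    print(relays, "relays:", relayed_traffic_mb(700, relays), "MB moved in total")
```

With no relays a 700 MB file costs the network 700 MB; with five relays the same download moves 4200 MB, most of it through nodes that never wanted the file.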

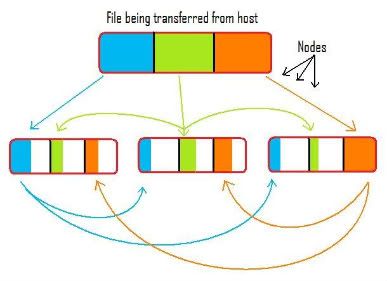

Fourth Generation P2P: The evolution to fourth generation can be defined by a change from the packet sharing of earlier generations to ‘streaming media’ directly from node to node, using a ‘swarming’ structure. Bittorrent is the best example of a 4th generation p2p network. Bittorrent requires a ‘torrent’ file, which describes the contents and location of the file, to be uploaded to the tracker. Sections of the file are then sent out to all downloaders using swarming, which actually increases the speed of transfer across many nodes (an individual node on its own sees no benefit), as the accompanying diagram shows.

As we can see, the nodes themselves pass on the portions they have already downloaded from the host to each other node, ensuring there is virtually unlimited capacity for transfer compared with other network structures. Theoretically it should take roughly the same amount of time to transfer the file to 1 node as to 1000 nodes (not considering hardware problems or data loss). Bittorrent is an extremely efficient method of transferring files to a huge number of nodes virtually simultaneously and could be (and partly has been) harnessed for legal distribution of files.
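The ‘1 node versus 1000 nodes’ claim can be checked with a rough back-of-the-envelope model. This is a sketch using the standard textbook lower bounds on distribution time, not a simulation of Bittorrent itself; the function names and all the link speeds are assumptions of mine (file size in megabits, speeds in megabits per second).

```python
def client_server_time(file_mbit, n_peers, server_up, peer_down):
    """Seconds needed when one server uploads a full copy to every peer."""
    return max(n_peers * file_mbit / server_up, file_mbit / peer_down)


def swarm_time(file_mbit, n_peers, server_up, peer_up, peer_down):
    """Idealised lower bound when peers re-upload pieces to each other."""
    total_upload = server_up + n_peers * peer_up
    return max(
        file_mbit / server_up,               # the seed must push out one copy
        file_mbit / peer_down,               # a peer cannot beat its own link
        n_peers * file_mbit / total_upload,  # every copy is uploaded by someone
    )


FILE_MBIT = 8000  # hypothetical file of roughly 1 GB
for n in (1, 10, 100, 1000):
    central = client_server_time(FILE_MBIT, n, server_up=100, peer_down=50)
    swarm = swarm_time(FILE_MBIT, n, server_up=100, peer_up=10, peer_down=50)
    print(f"{n:>4} peers: central {central:>8.0f} s, swarm {swarm:>5.0f} s")
```

Under these assumptions the central server's time grows in step with the number of peers, while the swarm's time levels off near the file size divided by a peer's upload speed. If peers can upload as fast as they download (for example `peer_up=50`), the model gives the same time for 1 peer and for 1000, which is the ideal case described above.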

I have to disagree with the author of the article ‘Playing our Song?’ http://www.technologyreview.com/Biotech/13257/page1/ on one point though. I am happy to find my music and get a ‘community spirit’ from other websites such as Lastfm.com or just general chat sites. What I can’t agree with is the various forms of DRM and copyright protection found on many of these ‘legally downloaded’ files. I simply don’t trust the companies putting code in my music. Recently I owned a Sony mp3 player which automatically converted my mp3 music (naturally all legally acquired by ripping my CDs) into ATRAC format. As I was travelling I had left all my originals at home, and when my computer crashed and needed to be formatted I found I was stuck with files that could only be played on my Sony mp3 player. When I subsequently put the mp3 player through the washing machine, I was left with completely useless files that I couldn’t play in any way, shape or form. If I had purchased these files I would be exceptionally angry at the wasted money. This is the reason I refuse to ‘legally download’ music from sites such as iTunes: I don’t believe my music is safe from their prying hands after downloading.

After reading the article ‘P2P networking: An information-sharing Alternative’ I have come to the conclusion that P2P’s greatest strength is also its greatest weakness. Governments and companies or associations fear that users can communicate with each other and share unlimited amounts of information irrespective of attempted controls. The problem for them is that governments and for-profit entities are no longer able to control media, as people quickly and easily share anything they wish. As such they have lost the control they had over the people, and their very existence is threatened. Being able to barter freely and directly with someone from another country over the internet undermines the position and market that many of these entities control; the lack of policing also threatens them.

Preparing for the Future Shock:

It seems I am expected to speculate here as to what the future will bring… Actually, I think the future will be very similar to what many movies already show us. The world will continue shrinking with increased travel speeds and efficiency, and data travel will speed up as well. Technologies such as mentally controlled computers and 3D screens are just around the corner, with the first prototypes in production now. I think the key issue for the future isn’t the increase in technology; it’s the shrinking of the world. As cultures clash there is an increased possibility of wars and other conflict. One of the primary conflict points is likely to be jurisdiction over the internet, as countries are essentially forced to assimilate and joint governments and policing must be created. I think it’s inevitable that the internet will not remain as free as it is now, as increasing cyber crime and cyber terrorism will necessitate an increasing police presence. Hopefully our freedom of speech will not be impaired in the process, and I personally hope that no excessively controlling country will be at the forefront of the new world.

Head Tracking

Projector tracking

3D TV

I think that the first four points really need not be discussed, as they are givens. We will get faster and more portable broadband, which will increasingly deliver high definition media. Increased security is a byproduct of increased cyber crime and terrorism and will also occur.

Increasing AI is also a given, and I think robots will take over many human tasks. I think that this will have a negative impact on humanity. There is no need to show politeness or courtesy to a computer or robot, and in a world where time is the only real commodity I can’t see people addressing computers with courtesy, even if they are programmed to demand it. This will likely lead to bad manners and weaken interpersonal relationships, further damaging the family structure.

As far as the semantic web has to go, I think we have only seen the very beginning. The use of two codes, one for human viewing and RDF inside html tags for machine viewing, is I think a transitional procedure. Eventually I think ‘machine language’ will be used exclusively, and computers or robots will simply have a form of codec that decodes this language, on command, into a language people can understand. It will essentially be like Google on another level. Currently we command Google to find web pages containing keywords; in the future this will be done ‘intelligently’ rather than in a concrete keyword style. It may even skim through thousands of webpages relevant to the topic (with or without the specified keywords) and produce a referenced output presentation containing all valid arguments from these sites. I think that for the ‘semantic web’ to truly become feasible, not only is a computer-understood language necessary, but AI must be significantly improved as well.
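To show what ‘two codes’ in one page can look like, here is a small sketch. The page fragment is made up by me and uses RDFa-style `property` attributes; the `PropertyExtractor` class is hypothetical and is nowhere near a full RDFa parser. A person reading the page sees an ordinary sentence, while a program can pull out labelled facts from the same tags.

```python
from html.parser import HTMLParser

# Hypothetical page fragment: readable text for people, with RDFa-style
# attributes that label the same text for machines.
PAGE = """
<div vocab="http://schema.org/" typeof="Person">
  <span property="name">Ada Example</span> works as a
  <span property="jobTitle">librarian</span>.
</div>
"""


class PropertyExtractor(HTMLParser):
    """Collects the text inside any tag that carries a property attribute."""

    def __init__(self):
        super().__init__()
        self._current = None  # property name of the tag being read, if any
        self.properties = {}

    def handle_starttag(self, tag, attrs):
        self._current = dict(attrs).get("property")

    def handle_data(self, data):
        if self._current and data.strip():
            self.properties[self._current] = data.strip()

    def handle_endtag(self, tag):
        self._current = None


extractor = PropertyExtractor()
extractor.feed(PAGE)
print(extractor.properties)  # {'name': 'Ada Example', 'jobTitle': 'librarian'}
```

The machine gets the name and job title without having to understand the sentence around them, which is the part that still needs the improved AI mentioned above.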

Semantic Web Wikipedia
